Let's create the following file:

redis-cluster.yml

apiVersion: v1
kind: Template
metadata:
  name: ${NAME}
  labels:
    name: ${NAME}
  annotations:
    description: Redis master/slave templates based on Statefulsets
parameters:
  - name: NAME
    description: Application name
    required: true
    value: "redis"
  - name: CPU
    description: "Number of CPUs per node in milli cores"
    required: true
    value: "500m"
  - name: RAM
    description: "RAM size per node in gigabytes"
    required: true
    value: "1Gi"
  - name: STORAGE
    description: "STORAGE size per node in gigabytes"
    required: true
    value: "1Gi"
  - name: DOCKER_PATH_AND_IMAGE
    description: "Docker Image"
    required: true
    value: "redis:6.0.8"
objects:
  - apiVersion: v1
    kind: ConfigMap
    metadata:
      name: redis-cluster
    data:
      update-node.sh: |
        #!/bin/sh
        REDIS_NODES="/data/nodes.conf"
        sed -i -e "/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/${POD_IP}/" ${REDIS_NODES}
        exec "$@"
      redis.conf: |+
        cluster-enabled yes
        cluster-require-full-coverage no
        cluster-node-timeout 15000
        cluster-config-file /data/nodes.conf
        cluster-migration-barrier 1
        appendonly yes
        protected-mode no
        maxmemory 1449551462
  - apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: redis-cluster
    spec:
      serviceName: redis-cluster
      replicas: 6
      selector:
        matchLabels:
          app: redis-cluster
      template:
        metadata:
          labels:
            app: redis-cluster
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "9121"
        spec:
          containers:
            # deploy the redis_exporter as a sidecar to a Redis instance.
            - name: redis-exporter
              image: oliver006/redis_exporter:v1.14.0-alpine
              ports:
                - name: exporter
                  containerPort: 9121
            - name: redis
              image: ${DOCKER_PATH_AND_IMAGE}
              resources:
                limits:
                  memory: "1.5Gi"
                requests:
                  memory: "1.5Gi"
              ports:
                - name: client
                  containerPort: 6379
                - name: gossip
                  containerPort: 16379
              command: ["/conf/update-node.sh", "redis-server", "/conf/redis.conf"]
              livenessProbe:
                tcpSocket:
                  port: client # named port
                initialDelaySeconds: 30
                timeoutSeconds: 5
                periodSeconds: 5
                failureThreshold: 5
                successThreshold: 1
              readinessProbe:
                exec:
                  command:
                    - redis-cli
                    - ping
                initialDelaySeconds: 10
                timeoutSeconds: 5
                periodSeconds: 3
              env:
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
              volumeMounts:
                - name: conf
                  mountPath: /conf
                  readOnly: false
                - name: redis-data
                  mountPath: /data
                  readOnly: false
          volumes:
            - name: conf
              configMap:
                name: redis-cluster
                defaultMode: 0755
      volumeClaimTemplates:
        - metadata:
            name: redis-data
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 3Gi
  - apiVersion: v1
    kind: Service
    metadata:
      name: redis-cluster
    spec:
      type: ClusterIP
      ports:
        - port: 6379
          targetPort: 6379
          name: client
        - port: 16379
          targetPort: 16379
          name: gossip
      selector:
        app: redis-cluster
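The template does not explain where the `maxmemory` value in `redis.conf` comes from. It appears to be 90% of the 1.5Gi container memory limit, leaving some headroom for Redis overhead. That reading is inferred from the numbers and is not stated in the template. A quick check of the arithmetic in the shell:

```shell
# 1.5Gi expressed in bytes (1536 MiB)
limit_bytes=$(( 1536 * 1024 * 1024 ))

# 90% of the limit, using integer division (assumed headroom ratio)
maxmemory=$(( limit_bytes * 9 / 10 ))

echo "maxmemory $maxmemory"
```

If you change the memory limit in the StatefulSet, the `maxmemory` line would need to be recomputed the same way.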

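Run the following against OpenShift. `oc process` only prints the rendered objects, so its output is piped to `oc apply` to actually create them:

oc login openshift-url.com -u **** -p **** --insecure-skip-tls-verify=true

oc project demo-project

oc process -f redis-cluster.yml -p DOCKER_PATH_AND_IMAGE=redis:6.0.8 -p NAME=redis | oc apply -f -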

Open a terminal in one of the pods and run the following, using the IP addresses of your own pods:

redis-cli --cluster create 10.130.73.13:6379 10.131.22.237:6379 10.130.92.206:6379 10.131.2.92:6379 10.131.91.230:6379 10.130.56.223:6379 --cluster-replicas 1

Getting the pod IP addresses (PowerShell):

oc get pods --selector app=redis-cluster -o jsonpath='{range .items[*]}{.status.podIP}:6379 {end}'

With kubectl in a POSIX shell, the lookup and the cluster creation can be combined into one command:

$ kubectl exec -it redis-cluster-0 -- redis-cli --cluster create --cluster-replicas 1 $(kubectl get pods -l app=redis-cluster -o jsonpath='{range .items[*]}{.status.podIP}:6379 {end}')

$ redis-cli --cluster create 10.130.73.13:6379 10.131.22.237:6379 10.130.92.206:6379 10.131.2.92:6379 10.131.91.230:6379 10.130.56.223:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.131.91.230:6379 to 10.130.73.13:6379

Adding replica 10.130.56.223:6379 to 10.131.22.237:6379

Adding replica 10.131.2.92:6379 to 10.130.92.206:6379

M: a79184a44655c69dd5233a8ec8f70ab84d757898 10.130.73.13:6379

slots:[0-5460] (5461 slots) master

M: 56d0dbc4affd306903c69264a7b0cab91664eea1 10.131.22.237:6379

slots:[5461-10922] (5462 slots) master

M: 508082b33224e3d6c6a3b1132fd7555008f8bf5b 10.130.92.206:6379

slots:[10923-16383] (5461 slots) master

S: 608fe5d82cd4d3b2cffa29a7fad9d80b95d44d70 10.131.2.92:6379

replicates 508082b33224e3d6c6a3b1132fd7555008f8bf5b

S: 8c8987b67092d03f910c9d16b2c90f8129bb7f19 10.131.91.230:6379

replicates a79184a44655c69dd5233a8ec8f70ab84d757898

S: dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379

replicates 56d0dbc4affd306903c69264a7b0cab91664eea1

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 10.130.73.13:6379)

M: a79184a44655c69dd5233a8ec8f70ab84d757898 10.130.73.13:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 608fe5d82cd4d3b2cffa29a7fad9d80b95d44d70 10.131.2.92:6379

slots: (0 slots) slave

replicates 508082b33224e3d6c6a3b1132fd7555008f8bf5b

M: 508082b33224e3d6c6a3b1132fd7555008f8bf5b 10.130.92.206:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 56d0dbc4affd306903c69264a7b0cab91664eea1 10.131.22.237:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 8c8987b67092d03f910c9d16b2c90f8129bb7f19 10.131.91.230:6379

slots: (0 slots) slave

replicates a79184a44655c69dd5233a8ec8f70ab84d757898

S: dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379

slots: (0 slots) slave

replicates 56d0dbc4affd306903c69264a7b0cab91664eea1

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

$

$ redis-cli --cluster check 10.130.73.13:6379

| Pod | IP | Role |
| --- | --- | --- |
| redis-cluster-0 | 10.130.73.13 | M1 |
| redis-cluster-1 | 10.131.22.237 | M2 |
| redis-cluster-2 | 10.130.92.206 | M3 |
| redis-cluster-3 | 10.131.2.92 | S3 |
| redis-cluster-4 | 10.131.91.230 | S1 |
| redis-cluster-5 | 10.130.56.223 | S2 |
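The slot ranges reported during cluster creation come from splitting the 16384 hash slots as evenly as possible across the three masters. The sketch below reproduces those ranges. `slot_ranges` is a hypothetical helper name, and the rounding scheme is an assumption chosen to match the output shown here, not a copy of the redis-cli source.

```shell
# Print the slot range each master would receive when 16384 slots
# are divided across $1 masters (rounded to the nearest slot).
slot_ranges() {
  awk -v masters="$1" 'BEGIN {
    total = 16384
    per = total / masters          # fractional slots per master
    first = 0
    cursor = 0
    for (i = 0; i < masters; i++) {
      last = int(cursor + per - 1 + 0.5)
      # the last master always takes whatever remains
      if (i == masters - 1 || last > total - 1) last = total - 1
      printf "Master[%d] -> Slots %d - %d\n", i, first, last
      first = last + 1
      cursor += per
    }
  }'
}

slot_ranges 3
```

Because 16384 is not divisible by 3, one master ends up with 5462 slots and the other two with 5461, which is what the `--cluster create` output reports.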

$ redis-cli cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:328

cluster_stats_messages_pong_sent:333

cluster_stats_messages_sent:661

cluster_stats_messages_ping_received:328

cluster_stats_messages_pong_received:328

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:661

$

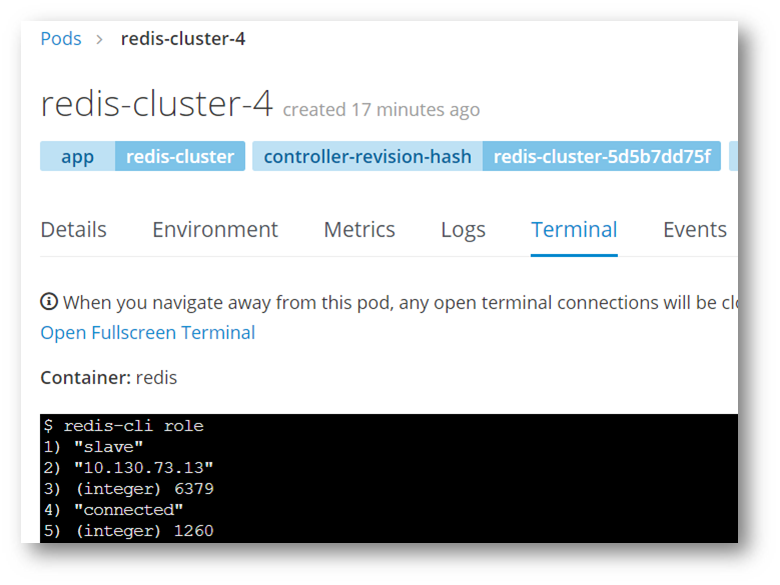

Check Roles

$ redis-cli role

1) "master"

2) (integer) 574

3) 1) 1) "10.131.91.230"
      2) "6379"
      3) "574"

Kubectl commands

$ for x in $(seq 0 5); do echo "redis-cluster-$x"; kubectl exec redis-cluster-$x -- redis-cli role; echo; done

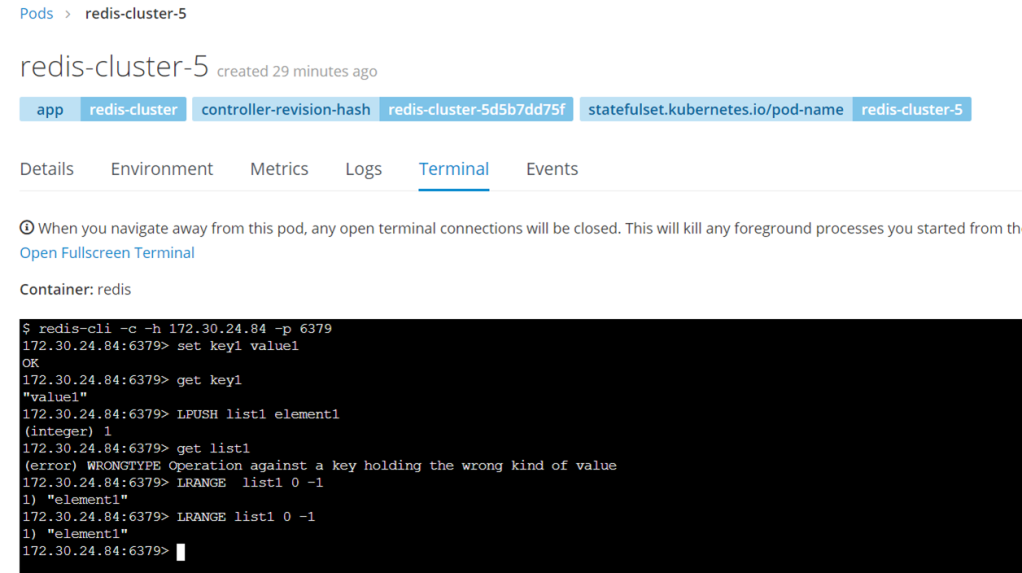

Testing the Cluster

Get the service IP address and connect from one of the pods, either by IP or by service name:

redis-cli -c -h 172.30.24.84 -p 6379

or

redis-cli -c -h redis-cluster -p 6379

$ redis-cli -c -h 172.30.24.84 -p 6379

172.30.24.84:6379> set key1 value1

OK

172.30.24.84:6379> get key1

"value1"

172.30.24.84:6379> LPUSH list1 element1

(integer) 1

172.30.24.84:6379> LRANGE list1 0 -1

1) "element1"

172.30.24.84:6379> LRANGE list1 0 -1

1) "element1"

172.30.24.84:6379>

Both keys that we inserted are stored on M2 (redis-cluster-1, 10.131.22.237):

$ redis-cli --cluster check 10.130.73.13:6379

10.130.73.13:6379 (a79184a4...) -> 0 keys | 5461 slots | 1 slaves.

10.130.92.206:6379 (508082b3...) -> 0 keys | 5461 slots | 1 slaves.

10.131.22.237:6379 (56d0dbc4...) -> 2 keys | 5462 slots | 1 slaves.

[OK] 2 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.130.73.13:6379)

M: a79184a44655c69dd5233a8ec8f70ab84d757898 10.130.73.13:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 608fe5d82cd4d3b2cffa29a7fad9d80b95d44d70 10.131.2.92:6379

slots: (0 slots) slave

replicates 508082b33224e3d6c6a3b1132fd7555008f8bf5b

M: 508082b33224e3d6c6a3b1132fd7555008f8bf5b 10.130.92.206:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 56d0dbc4affd306903c69264a7b0cab91664eea1 10.131.22.237:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 8c8987b67092d03f910c9d16b2c90f8129bb7f19 10.131.91.230:6379

slots: (0 slots) slave

replicates a79184a44655c69dd5233a8ec8f70ab84d757898

S: dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379

slots: (0 slots) slave

replicates 56d0dbc4affd306903c69264a7b0cab91664eea1

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

$
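Which master holds a key is determined by its hash slot: Redis Cluster computes CRC16 of the key (the XMODEM variant) modulo 16384, and the master owning that slot range stores the key. The following is a minimal sketch of that calculation in POSIX shell. `keyslot` is a hypothetical helper name, and the sketch assumes plain ASCII keys and ignores hash tags (the `{...}` syntax).

```shell
# Compute the Redis Cluster hash slot for an ASCII key:
# CRC16 (XMODEM, polynomial 0x1021, initial value 0) modulo 16384.
keyslot() {
  rest=$1
  crc=0
  while [ -n "$rest" ]; do
    c=${rest%"${rest#?}"}            # first character of the remaining key
    rest=${rest#?}                   # drop that character
    byte=$(printf '%d' "'$c")        # character code
    crc=$(( crc ^ (byte << 8) ))
    j=0
    while [ "$j" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      j=$(( j + 1 ))
    done
  done
  echo $(( crc % 16384 ))
}

keyslot key1
keyslot list1
```

The result can be compared with `redis-cli cluster keyslot key1` on any node, and then matched against the slot ranges in the `--cluster check` output to see which master owns the key.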

Let's see what happens if we shut down redis-cluster-1:

$ redis-cli --cluster check 10.130.73.13:6379

10.130.73.13:6379 (a79184a4...) -> 0 keys | 5461 slots | 1 slaves.

10.130.92.206:6379 (508082b3...) -> 0 keys | 5461 slots | 1 slaves.

10.130.56.223:6379 (dde7aa10...) -> 2 keys | 5462 slots | 1 slaves.

[OK] 2 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.130.73.13:6379)

M: a79184a44655c69dd5233a8ec8f70ab84d757898 10.130.73.13:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 608fe5d82cd4d3b2cffa29a7fad9d80b95d44d70 10.131.2.92:6379

slots: (0 slots) slave

replicates 508082b33224e3d6c6a3b1132fd7555008f8bf5b

M: 508082b33224e3d6c6a3b1132fd7555008f8bf5b 10.130.92.206:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 56d0dbc4affd306903c69264a7b0cab91664eea1 10.130.99.28:6379

slots: (0 slots) slave

replicates dde7aa10861f4ace6db54e4949f85dd6115bdf90

S: 8c8987b67092d03f910c9d16b2c90f8129bb7f19 10.131.91.230:6379

slots: (0 slots) slave

replicates a79184a44655c69dd5233a8ec8f70ab84d757898

M: dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

$

redis-cluster-5 (10.130.56.223), which was initially a slave, has been promoted to master. redis-cluster-1, which was initially a master at 10.131.22.237, has come back with a new pod IP (10.130.99.28) and rejoined as a slave of redis-cluster-5. The two keys have been retained:

$ redis-cli -c -h redis-cluster -p 6379

redis-cluster:6379> get key1

"value1"

redis-cluster:6379>
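The restarted pod kept its node ID but received a new IP, which is the situation the `update-node.sh` script in the ConfigMap handles: before starting `redis-server`, it rewrites the IP on the `myself` line of `nodes.conf` to the current `POD_IP`. The sketch below applies the same `sed` expression to sample input so the effect is visible. `rewrite_myself_ip` is a hypothetical wrapper, and the two input lines are made-up examples in the `nodes.conf` format (the epoch and timestamp fields are placeholders).

```shell
# Apply the substitution from update-node.sh to nodes.conf lines on stdin.
# $1 is the new pod IP. Only the line containing "myself" is changed.
rewrite_myself_ip() {
  sed -e "/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/$1/"
}

# Illustrative input: the node's own line still carries the old IP.
printf '%s\n' \
  '56d0dbc4affd306903c69264a7b0cab91664eea1 10.131.22.237:6379@16379 myself,slave dde7aa10861f4ace6db54e4949f85dd6115bdf90 0 0 7 connected' \
  'dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379@16379 master - 0 0 7 connected 5461-10922' \
  | rewrite_myself_ip 10.130.99.28
```

Only the first IP-shaped match on the `myself` line is replaced, so the entries for the other nodes are left alone and are refreshed through the cluster's gossip traffic instead.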

The nodes.conf file on one of the nodes (captured before the failover):

$ cd /data

$ ls

appendonly.aof dump.rdb nodes.conf

$ cat nodes.conf

608fe5d82cd4d3b2cffa29a7fad9d80b95d44d70 10.131.2.92:6379@16379 slave 508082b33224e3d6c6a3b1132fd7555008f8bf5b 0 1601563224000 3 connected

508082b33224e3d6c6a3b1132fd7555008f8bf5b 10.130.92.206:6379@16379 master - 0 1601563224475 3 connected 10923-16383

56d0dbc4affd306903c69264a7b0cab91664eea1 10.131.22.237:6379@16379 master - 0 1601563223000 2 connected 5461-10922

8c8987b67092d03f910c9d16b2c90f8129bb7f19 10.131.91.230:6379@16379 slave a79184a44655c69dd5233a8ec8f70ab84d757898 0 1601563225476 1 connected

dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379@16379 slave 56d0dbc4affd306903c69264a7b0cab91664eea1 0 1601563224000 2 connected

a79184a44655c69dd5233a8ec8f70ab84d757898 10.130.73.13:6379@16379 myself,master - 0 1601563222000 1 connected 0-5460

vars currentEpoch 6 lastVoteEpoch 0
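Each line of `nodes.conf` is a space-separated record: node ID, address (`ip:port@cluster-port`), flags, master ID (or `-`), two timestamps, config epoch, link state, and then any slot ranges the node owns. As a small sketch of reading that layout, the hypothetical helper `list_masters` below prints each master's address together with its slots. The input lines are copied from the file above.

```shell
# Print "ip:port slots..." for every node whose flags include "master".
# Reads nodes.conf content from the given files, or stdin if none.
list_masters() {
  awk '$3 ~ /master/ {
    addr = $2
    sub(/@.*/, "", addr)                 # drop the @cluster-port suffix
    printf "%s", addr
    for (i = 9; i <= NF; i++) printf " %s", $i   # fields 9+ are slot ranges
    printf "\n"
  }' "$@"
}

list_masters <<'EOF'
508082b33224e3d6c6a3b1132fd7555008f8bf5b 10.130.92.206:6379@16379 master - 0 1601563224475 3 connected 10923-16383
dde7aa10861f4ace6db54e4949f85dd6115bdf90 10.130.56.223:6379@16379 slave 56d0dbc4affd306903c69264a7b0cab91664eea1 0 1601563224000 2 connected
a79184a44655c69dd5233a8ec8f70ab84d757898 10.130.73.13:6379@16379 myself,master - 0 1601563222000 1 connected 0-5460
vars currentEpoch 6 lastVoteEpoch 0
EOF
```

Against a running pod, the same function could be fed with `oc exec redis-cluster-0 -- cat /data/nodes.conf | list_masters`.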